Although technically it is now possible to drive autonomously, legislative progress remains an issue (Picture copyright Daimler)

Jason Barnes looks at Daimler’s Intelligent Drive programme to consider how machine vision has advanced the state of the art of vision-based in-vehicle systems

Traditionally, radar was the in-vehicle Driver Assistance System (DAS) technology of choice, particularly for applications such as adaptive cruise control and pre-crash warning generation. Although vision-based technology has made greater inroads more recently, it is not a case of ‘one sensor wins’. Radar and vision are complementary and redundancy of sensors will be needed for both current DAS functionalities and future autonomous driving. It should be noted that radar-based sensors have also continued to develop and other solutions, such as laser, continue to be highly relevant.

The different types of sensor technology used in vehicle-based safety systems may continue to battle for predominance, but there can be little doubt that machine vision-based solutions have done much to address the concerns of their detractors in recent years.

A decade ago, critics argued that non-safety issues such as form factor counted strongly against the use of vision-based systems: buyers, they said, would simply not accept the aesthetic compromises needed to incorporate potentially life-saving systems into vehicles. Today, however, the benefits of such systems have won the argument, helped by appreciable reductions in camera unit size and significant increases in system performance.

We can look back on some amazing progress over the last few years. If, for example, we place the features list of the Mercedes-Benz S-Class of five or six years ago next to that of the current model, we can see a large increase in machine vision-based DAS, both in gradual improvements to existing functions and in the appearance of altogether new ones.

The 2013 Mercedes-Benz S-Class features vision-based safety and convenience functions including lane detection, speed limit and other traffic sign detection, night vision, pedestrian and animal detection, and automatic high-beam adjustment, which prevents the host vehicle’s lights from blinding the drivers of oncoming vehicles. The 2013 S-Class’s Brake Assist BAS Plus incorporates Cross Traffic Assist, designed to intervene if necessary at urban intersections in order to prevent low-speed collisions. Pedestrian detection, meanwhile, has developed to the point where it provides fully autonomous braking assistance should that be required (see [Pedestrian Detection](http://www.gavrila.net/Research/Pedestrian_Detection/pedestrian_detection.html)).

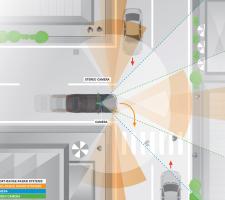

Significantly, the 2013 S-Class introduces stereo vision. This works in conjunction with two on-board radar systems and consists of two miniature cameras mounted high on the windscreen, some 20 centimetres apart, within the central rear-view mirror. Stereo vision enables 3D imaging and functions such as road surface estimation: bumps and potholes can be detected and the suspension settings at each of the S-Class’s wheels adjusted automatically to give a smoother ride.
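
The depth computation that stereo vision makes possible rests on triangulation. A point seen by both cameras appears shifted horizontally between the two images, and this shift (the disparity d, in pixels) shrinks as the point moves further away. For a rectified camera pair with focal length f (in pixels) and baseline B, depth is Z = f·B/d. The Python sketch below is a textbook illustration, not Daimler’s implementation: it uses the roughly 20 cm baseline quoted above, while the focal length of 1,000 pixels is purely an assumption chosen for round numbers.

```python
# Rectified stereo pair: depth from disparity, Z = f * B / d.
BASELINE_M = 0.20         # camera spacing of about 20 cm, as quoted in the article
FOCAL_LENGTH_PX = 1000.0  # ASSUMED focal length in pixels, for illustration only


def depth_from_disparity(disparity_px: float,
                         focal_px: float = FOCAL_LENGTH_PX,
                         baseline_m: float = BASELINE_M) -> float:
    """Return the depth in metres of a point with the given disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m: float,
                disparity_error_px: float = 1.0,
                focal_px: float = FOCAL_LENGTH_PX,
                baseline_m: float = BASELINE_M) -> float:
    """First-order depth uncertainty: dZ ~= Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    for d in (20.0, 4.0):
        z = depth_from_disparity(d)
        print(f"disparity {d:>4} px -> depth {z:.1f} m, +/- {depth_error(z):.1f} m")
```

With these assumed values, a 20-pixel disparity corresponds to 10 m and a 4-pixel disparity to 50 m, while a one-pixel matching error grows from about 0.5 m of depth uncertainty at 10 m to about 12.5 m at 50 m. Because the error grows with the square of distance, baseline width and sub-pixel matching accuracy both matter for precise depth at range.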

For the first time this year, Daimler is offering Steering Assist, with which the car starts to drive autonomously: it can take control both longitudinally and laterally and stay within its lane by itself. Daimler says that the vehicle ahead can be used as a reference for ‘semi-autonomous following’ even where road markings are not visible.
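
Later in this article, Steering Assist is described as checking every 10 seconds whether the driver’s hands are still on the wheel. A periodic check of that kind can be sketched as a simple monitor. The Python below is hypothetical throughout apart from the 10-second interval: the class and function names are invented, the hands-on signal is assumed to be a single boolean, and because the article does not say how the production system reacts to a failed check, the sketch only raises a flag.

```python
# Check interval taken from the article; everything else here is hypothetical.
CHECK_INTERVAL_S = 10.0


class HandsOnMonitor:
    """Hypothetical periodic hands-on-wheel check (illustrative sketch only)."""

    def __init__(self, interval_s: float = CHECK_INTERVAL_S) -> None:
        self.interval_s = interval_s
        self.next_check_s = interval_s  # first check one interval after activation

    def update(self, now_s: float, hands_on: bool) -> bool:
        """Return True when a check falls due and finds the hands off the wheel."""
        if now_s < self.next_check_s:
            return False  # no check due yet
        # Advance the schedule past the current time so checks stay one interval apart.
        while self.next_check_s <= now_s:
            self.next_check_s += self.interval_s
        return not hands_on


def simulate(hands_off_from_s: float, duration_s: int) -> list[float]:
    """Step through time in 1 s increments and record when a check fails."""
    monitor = HandsOnMonitor()
    alerts = []
    for t in range(duration_s + 1):
        hands_on = t < hands_off_from_s
        if monitor.update(float(t), hands_on):
            alerts.append(float(t))
    return alerts


if __name__ == "__main__":
    print(simulate(hands_off_from_s=15, duration_s=30))
```

If the driver lets go at 15 s, the first failed check in this sketch comes at the 20 s mark, so a purely periodic scheme can let almost a full interval of hands-off driving pass before it notices.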

Rate of market penetration

Over a period of just half a dozen years, then, we have progressed from two or three vision-based functions to eight or nine, each with significantly enriched capabilities. And where a few years ago the discussion was over whether a vehicle might carry one camera or two, four is now possible: in the S-Class, the mirror-mounted stereo cameras are complemented by the two used by the night vision system, although these are customer-selected options and not yet necessarily standard fitment.

Trickle-down is already happening. The 2013 E-Class, Mercedes-Benz’s mid-range offering, will feature many of the same technologies as the S-Class and, from next year, we can expect to see some of them on the new C-Class as well (Mercedes-Benz’s volume car, equivalent to the BMW 3 Series or Ford Mondeo). The same is happening in other vehicle manufacturers’ ranges.

Next stages

The principal drivers of these developments have come from the computational field, where that old friend of ITS International, Moore’s Law, applies, and from the development of camera-based sensors with the high dynamic range and temperature robustness needed for the automotive environment. According to Daimler, however, it is stereo vision that is the game-changer, because it allows precise computation of depth. That increases the power of driver assistance, pre-crash and safety functions by orders of magnitude.

“Traffic sign recognition has been around for nearly a decade. If we compare progress, then we’ve moved from a solution which generated several mistakes an hour to one that is pretty much fool-proof. Pedestrian detection, if one compares 10 years of research, is several orders of magnitude better; the false-positive ‘ghosts’ are gone, courtesy of better vision solutions but also because of improved processing and algorithms,” says Dariu Gavrila, senior research scientist in the Department of Environmental Perception at Daimler AG.

Asked “What’s next?”, he says that autonomous driving will be a focus of Daimler’s research in the coming years.

Driverless vehicles have grabbed many a headline recently, courtesy of the efforts of companies such as Google. ITS industry commentators will point to trials stretching back decades, but current iterations have some important differentiators. At the Frankfurt Motor Show this year, Daimler chairman Dieter Zetsche shared a stage with an S-Class which had just completed a 100 km journey from Mannheim to Pforzheim with the driver sitting pretty much hands-off. The route is historically significant for Daimler: it is the one Bertha Benz took 125 years ago on the world’s first overland drive. This takes previous demonstrations of vehicle autonomy, such as the US’s DARPA Urban Grand Challenge, several steps forward, because the S-Class was moving through a complex, real-world environment covering a variety of inter-urban and urban conditions. It also used realistic sensor technology: guidance came not from laser solutions based on military systems, which potentially cost more than the vehicle itself, but from systems not too far removed from those already found in vehicles on sale to the public.

Progression towards autonomy

At present, Steering Assist works on straight or slightly curved roads, and it checks every 10 seconds whether the driver still has their hands on the steering wheel. Even greater autonomy could be available in production form by the time the next-generation Mercedes-Benz S-Class is fielded.

It is possible to envisage a structured environment in which vehicle autonomy is introduced gradually, starting with highway and parking environments and progressing to more complex situations. Indeed, it should be possible in the not-too-distant future for the person in the driving seat to accomplish some non-essential tasks. For example, in parts of the world where the practice cannot be stopped completely, texting while driving need not remain the significant safety issue it is today.

But in truth, full autonomous driving is still a fair way off. A limiting factor at present is the legal framework, principally the Vienna Convention, which dictates that a driver remains in control of the vehicle at all times (and which is the reason for Steering Assist’s hands-on check every 10 seconds). Changes to the law will be needed before the technology’s potential can be fully realised.

An overview of Daimler’s Intelligent Drive is provided [here](http://techcenter.mercedes-benz.com/_en/intelligent_drive/detail.html).

A search on YouTube for “Mercedes-Benz Intelligent Drive” produces a number of video clips, including some of the autonomous drive along the memorial Bertha Benz route from Mannheim to Pforzheim.